When it is not Normal... The Mann-Whitney Test

The Mann-Whitney Test

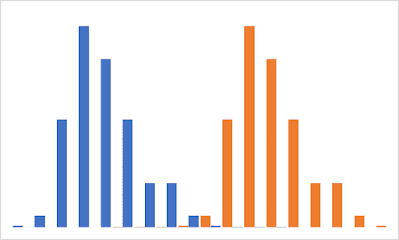

Mann-Whitney test helps you compare two sets of data when they are not normally distributed. I would use Mann-Whitney test only after I confirm using the Anderson-Darling test. I should add that I run a box-plot first before running the AD test.

I used to think that Mann-Whitney compares the medians of two data sets. But in the process of implementing Mann-Whitney in Excel LAMBDA, I found out that Mann-Whitney test compares the mean ranks, in doing so, it determines if the two data set came from the same population.

Step 1: Group the two samples together and sort the data in ascending order, but retain their sample origin.

Step 2: Rank the combined data.

Step 3: Separate the data back into the two samples. Sum the ranks for each sample.

Step 4: Compute the test statistics U

`U_1 = R_1 - (n_1(n_1+1))/2` and `U_2 = R_2 - (n_2(n_2+1))/2`

Step 5: Choose the small U to calculate the equivalent z-statistics.

`z = (U - m_U) / sigma_U`

where `m_U` and `sigma_U` are the mean and standard deviation of `U` given by

`m_U = (n_1n_2)/2`

`sigma_U = sqrt( (n_1 n_2 (n_1 + n_2 + 1) / 12)`

Step 6: Calculate the p-value

`p = NORM.S.DIST(z, TRUE) x 2`

Implementing Mann-Whitney Test in LAMBDA

When I was calculating the steps for the Mann-Whitney test, I used the Excel formula RANK.AVG. However this formula could not be used in LAMBDA. It does not accept arrays only references to ranges. Otherwise the implementation would be a lot simpler. Hence in this implementation you will find a number of Excel formula acrobatics to get the same results as RANK.AVG.

Step 1: I combine the two samples

size_1, COUNT(array_1),

size_2, COUNT(array_2),

array_column, TOCOL(VSTACK(array_1, array_2), 3),

array_size, size_1 + size_2,

Step 2: Then sort the combined array, sort them, do some acrobatics to find the ranks in back in their samples.

array_sorted, SORT(array_column, , 1, FALSE),

array_rsorted, SORT(array_column, , -1, FALSE),

array_first, MATCH(array_sorted, array_sorted, 0),

array_last, array_size - MATCH(array_sorted, array_rsorted, 0) + 1,

array_rank, (array_first + array_last)/2,

rank_1, INDEX(array_rank, MATCH(array_1, array_sorted, 0)),

rank_2, INDEX(array_rank, MATCH(array_2, array_sorted, 0)),

Step 3: I then sum the ranks

rank_sum_1, SUM(rank_1),

rank_sum_2, SUM(rank_2),

Step 4: Then I calculate the U statistics and choose the smaller value

u_statistics_1, rank_sum_1 - (size_1*(size_1+1)/2),

u_statistics_2, rank_sum_2 - (size_2*(size_2+1)/2),

u_statistics, IF(u_statistics_1 < u_statistics_2, u_statistics_1, u_statistics_2),

Step 5: The mean, standard deviation, and z-statistics of U is then calculated

mean, size_1 * size_2/2,

stdev, SQRT(size_1*size_2*(size_1+size_2+1)/12),

c_continuity_correction, IF(u_statistics > mean, -0.5, 0.5),

z_statistics, (u_statistics - mean + c_continuity_correction) / stdev,

Step 6: Finally the p-value is calculated

z_statistics, (u_statistics - mean + c_continuity_correction) / stdev,

I read the continuity_correction is required when dealing with discrete data.

Putting It All Together

Below is the culmination of the effort. You may notice additional line codes not mentioned above. These are pre-checks, intermediate values, and for pretty printing.

dcrNonParametric.MannWhitney.Test

=LAMBDA(array_1, array_2, [tail], [show_details],

LET(tail, IF(ISOMITTED(tail), 0, tail),

show_details, IF(ISOMITTED(show_details), FALSE, show_details),

array_1, FILTER(array_1, array_1<>""),

array_2, FILTER(array_2, array_2<>""),

size_1, COUNT(array_1),

size_2, COUNT(array_2),

array_column, TOCOL(VSTACK(array_1, array_2), 3),

array_size, size_1 + size_2,

array_sorted, SORT(array_column, , 1, FALSE),

array_rsorted, SORT(array_column, , -1, FALSE),

array_first, MATCH(array_sorted, array_sorted, 0),

array_last, array_size - MATCH(array_sorted, array_rsorted, 0) + 1,

array_rank, (array_first + array_last)/2,

rank_1, INDEX(array_rank, MATCH(array_1, array_sorted, 0)),

rank_2, INDEX(array_rank, MATCH(array_2, array_sorted, 0)),

rank_sum_1, SUM(rank_1),

rank_sum_2, SUM(rank_2),

u_statistics_1, rank_sum_1 - (size_1*(size_1+1)/2),

u_statistics_2, rank_sum_2 - (size_2*(size_2+1)/2),

u_statistics, IF(u_statistics_1 < u_statistics_2, u_statistics_1, u_statistics_2),

mean, size_1 * size_2/2,

stdev, SQRT(size_1*size_2*(size_1+size_2+1)/12),

c_continuity_correction, IF(u_statistics > mean, -0.5, 0.5),

z_statistics, (u_statistics - mean + c_continuity_correction) / stdev,

pvalueOneTail, NORM.S.DIST(z_statistics, TRUE),

pvalueTwoTail, NORM.S.DIST(z_statistics, TRUE) * 2,

pvalue, SWITCH(tail, 0, pvalueTwoTail, 1, pvalueOneTail, "Tail:Two Tail=0, One Tail=1"),

details, HSTACK(

VSTACK(

"N1", "N2", "Rank Sum 1", "Rank Sum 2", "U1", "U2",

"U", "Mean", "StdDev", "Continuity correction",

"z_statistics", "pvalueOneTail", "pvalueTwoTail"

),

VSTACK(

size_1, size_2, rank_sum_1, rank_sum_2, u_statistics_1, u_statistics_2,

u_statistics, mean, stdev, c_continuity_correction,

z_statistics, pvalueOneTail, pvalueTwoTail

)

),

IF(show_details, IFNA(details,""), pvalue)

)

)

Seeing Mann-Whitney In Action

Suppose you are given two sets of data in Columns B and C shown below. Using RANK.AVG, we can easily get the rank of each in the combined set. And from there we can calculate the rank sum as Ra and Rb. Unfortunately RANK.AVG could not be used in LAMBDA as we are working with arrays. The acrobatics in Step 2 became necessary.

In case you are wondering why the values seems slightly different, this is due to rounding by Excel when the column widths are smaller.

We now have a way to compare non-parametric data distributions using Mann-Whitney test.

Next, I will be looking at ANOVA and Kruskal-Wallis tests.

Please continue to visit DC-DEN!

Comments

Post a Comment