Linear Discriminant Analysis (LDA) - Using Linear Regression for Classification

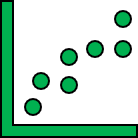

Linear Discriminant Analysis (LDA), as presented here, uses linear regression to perform supervised classification. You assign each class a numerical value, then use linear regression to project your observations onto those assigned values. Finally, you calculate the thresholds that distinguish between classes. In essence, LDA attempts to find the best linear function that separates your data points into distinct classes. The above diagram illustrates this idea.

Implementing LDA using LAMBDA

Fit

Steps in implementing LDA's Fit:

1. Find the distinct classes and assign each an arbitrary value - `UNIQUE` and `SEQUENCE`.
2. Label each observation with the arbitrary value assigned to its class - `XLOOKUP`.
3. Find the linear regression coefficients for these observations - `dcrML.Linear.Fit`.
4. Project each observation onto the linear regression - `dcrML.Linear.Predict`.
5. Find the threshold of each class - `classCutOff` from the spread ...
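
To make the Fit steps above concrete, here is a minimal sketch in Python rather than Excel LAMBDA. It does not reproduce `dcrML.Linear.Fit`, `dcrML.Linear.Predict`, or `classCutOff`. The names `solve`, `lda_fit`, and `lda_predict` are hypothetical, the regression is ordinary least squares solved through the normal equations, and the cut-off rule (the midpoint between the mean projections of adjacent classes) is an assumption standing in for the threshold step, whose details are not given above.

```python
def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lda_fit(X, labels):
    """Hypothetical Fit: returns (classes, coefficients, cutoffs)."""
    # Step 1: distinct classes, each given an arbitrary numeric code 1..k.
    classes = sorted(set(labels))
    code = {c: i + 1 for i, c in enumerate(classes)}
    # Step 2: replace each observation's label by its numeric code.
    y = [float(code[c]) for c in labels]
    # Step 3: least-squares coefficients from (A^T A) w = A^T y,
    # where A is X with a leading column of ones for the intercept.
    A = [[1.0] + list(row) for row in X]
    p = len(A[0])
    ata = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * t for r, t in zip(A, y)) for i in range(p)]
    w = solve(ata, aty)
    # Step 4: project each observation onto the fitted regression.
    proj = [sum(wi * ai for wi, ai in zip(w, r)) for r in A]
    # Step 5 (assumption): cut-off = midpoint between the mean
    # projections of adjacent classes, in class-code order.
    means = [
        sum(v for v, c in zip(proj, labels) if c == cls) / labels.count(cls)
        for cls in classes
    ]
    cutoffs = [(lo + hi) / 2 for lo, hi in zip(means, means[1:])]
    return classes, w, cutoffs


def lda_predict(model, X):
    """Assign each row to the class whose cut-off interval holds its score."""
    classes, w, cutoffs = model
    out = []
    for row in X:
        score = w[0] + sum(wi * xi for wi, xi in zip(w[1:], row))
        out.append(classes[sum(score > c for c in cutoffs)])
    return out


# Illustrative data only: two well-separated classes with two features.
X = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.2], [6.0, 6.5], [6.5, 7.0], [7.0, 6.8]]
labels = ["a", "a", "a", "b", "b", "b"]
model = lda_fit(X, labels)
print(lda_predict(model, X))
```

The midpoint rule relies on the mean projections increasing with the class code. That holds for two classes when the regression includes an intercept, but it is not guaranteed for three or more classes, where the arbitrary ordering of the codes can affect the result.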