Out-of-the-Box Part 4 - Variance Testing with F.TEST

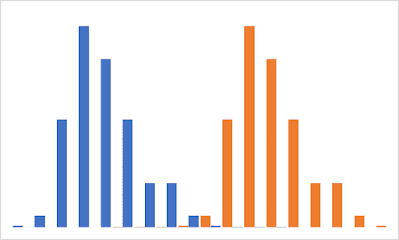

We wrap up our "Out of the Box" series with the F.TEST function, used to check if the variances of two samples are equal. Variance is simply the square of the standard deviation `sigma^2`, which measures how spread out data is around the mean. Since variance and standard deviation are mathematically linked, we use the terms interchangeably. The F.TEST formula is simple: F.TEST(array1, array2) where array1 and array2 are your data ranges, which don’t need to be the same size. Visualizing Variance with Box Plots In the previous post, we looked at box plots for five sample distributions. From the box plots, you might think that samples A, B, and D have the same spread , while C and E are different . However, these box plots only show quartiles (25% to 75% of the data) and may not accurately reflect variance. Using the F.TEST formula, we find that while sample A's variance differs from sample B's, sample C's variance is similar to B's. As it turns out sample...